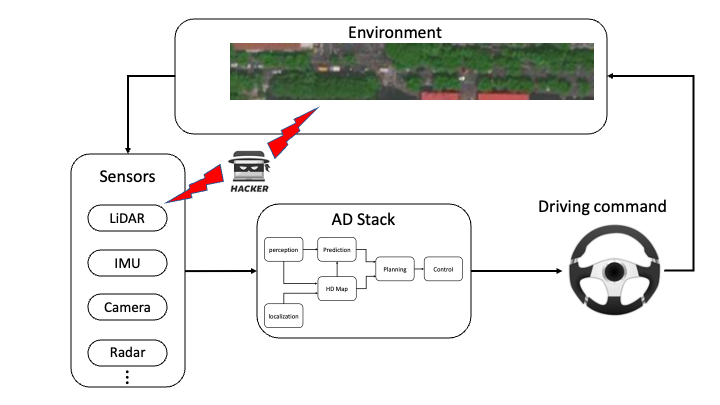

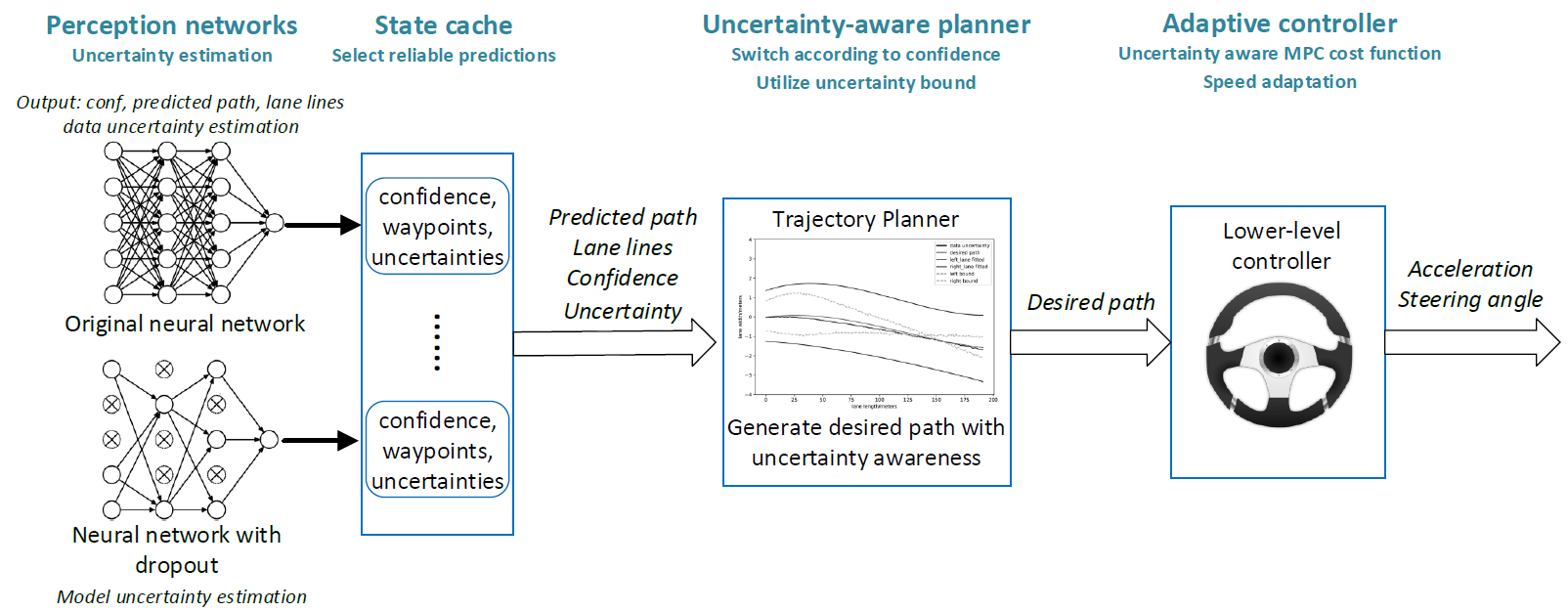

In the development of advanced driver-assistance systems (ADAS) and autonomous vehicles, machine learning techniques based on deep neural networks (DNNs) have been widely used for vehicle perception. These techniques offer significant improvements in average perception accuracy over traditional methods; however, they have been shown to be susceptible to adversarial attacks, in which small perturbations in the input can cause significant errors in the perception results and lead to system failure. Most prior work addressing such attacks focuses only on the sensing and perception modules. In this work, we propose an end-to-end approach that addresses the impact of adversarial attacks throughout the perception, planning, and control modules. In particular, we choose a target ADAS application, the automated lane centering system in OpenPilot, quantify the perception uncertainty under adversarial attacks, and design a robust planning and control module based on this uncertainty analysis. We evaluate the proposed approach using both a public dataset and a production-grade autonomous driving simulator. The experimental results demonstrate that our approach effectively mitigates the impact of adversarial attacks and achieves a 55% to 90% improvement over the original OpenPilot.
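To make "uncertainty-aware planning and control" more concrete, here is a minimal sketch of one generic way a perception uncertainty estimate could feed into lane centering. It is not the authors' method and not OpenPilot's API: the names `LaneLine`, `fuse_lane_center`, and `steering_command`, the 3.6 m lane width, and all gains are hypothetical. The sketch assumes perception reports each lane line's lateral offset (left positive) together with a 1-sigma uncertainty, fuses the two per-line estimates of the lane center by inverse-variance weighting, and de-rates a bounded proportional correction when the fused uncertainty is high.

```python
import math
from dataclasses import dataclass


@dataclass
class LaneLine:
    # Hypothetical perception output: lateral position of one lane line at a
    # lookahead point (meters, left positive) and its 1-sigma uncertainty.
    offset_m: float
    std_m: float


def fuse_lane_center(left: LaneLine, right: LaneLine, lane_width_m: float = 3.6):
    """Inverse-variance fusion of the two per-line lane-center estimates.

    Each line implies a lane center half a lane width away from it; the less
    certain line (e.g. one perturbed by an attack) gets proportionally less weight.
    Returns (center_m, std_m) relative to the vehicle.
    """
    c_left = left.offset_m - lane_width_m / 2.0
    c_right = right.offset_m + lane_width_m / 2.0
    w_left = 1.0 / left.std_m ** 2
    w_right = 1.0 / right.std_m ** 2
    center = (w_left * c_left + w_right * c_right) / (w_left + w_right)
    std = math.sqrt(1.0 / (w_left + w_right))
    return center, std


def steering_command(center_m: float, std_m: float,
                     k_p: float = 0.5, std_ref_m: float = 0.2,
                     max_cmd: float = 0.3) -> float:
    """Proportional correction toward the fused center (hypothetical gains).

    The effective gain shrinks as the fused uncertainty grows, and the output
    is clamped to [-max_cmd, max_cmd] so a bad estimate cannot demand a large
    correction in a single step.
    """
    k_eff = k_p / (1.0 + (std_m / std_ref_m) ** 2)
    cmd = k_eff * center_m
    return max(-max_cmd, min(max_cmd, cmd))


if __name__ == "__main__":
    # Nominal: both lines 1.8 m away with low uncertainty -> centered.
    print(fuse_lane_center(LaneLine(1.8, 0.1), LaneLine(-1.8, 0.1)))
    # Left line shifted by 1 m but flagged as highly uncertain.
    center, std = fuse_lane_center(LaneLine(2.8, 1.0), LaneLine(-1.8, 0.1))
    print(center, std, steering_command(center, std))
```

In the second example the fused center stays about 0.01 m from the vehicle, whereas a plain average of the two per-line estimates would place it about 0.5 m off. The point of the sketch is only that the uncertainty estimate must be reasonably calibrated under attack for this kind of weighting to help.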

Localization is a key component for the safety of autonomous vehicles. In practice, multi-sensor fusion (MSF) algorithms have been used extensively to accommodate sensor noise. However, most MSF algorithms focus on accuracy rather than safety and security. Recent work shows that spoofing GNSS signals can jeopardize the correctness of MSF-based localization. In this work, we propose an adaptive localization framework that monitors the statistics of the input sensor data, detects potential abnormal behavior, and dynamically adjusts key parameters of the MSF algorithm. Experiments are conducted in a closed-loop simulation framework. The results show that the proposed method can effectively mitigate the impact of GNSS spoofing attacks.
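The monitor, detect, and adjust loop described above can be illustrated with a standard innovation-based consistency test. This is a minimal sketch under simplifying assumptions, not the framework's actual design: the state is a 2D position whose prediction (e.g. from IMU/odometry dead reckoning) is given, the GNSS measures position directly (H = I), the prediction and GNSS covariances are diagonal, and the "key parameter" being adapted is the GNSS measurement variance. The names `PosEstimate`, `adaptive_gnss_update`, and the inflation rule `r * NIS / threshold` are hypothetical choices for illustration.

```python
from dataclasses import dataclass

# 99th percentile of the chi-square distribution with 2 degrees of freedom
# (the dimension of a 2D position innovation); chi2.ppf(0.99, 2) ~= 9.21.
CHI2_2DOF_99 = 9.21


@dataclass
class PosEstimate:
    # Hypothetical 2D position estimate with diagonal covariance.
    x: float
    y: float
    var_x: float
    var_y: float


def adaptive_gnss_update(pred: PosEstimate, gnss_x: float, gnss_y: float,
                         r_var: float, threshold: float = CHI2_2DOF_99):
    """Kalman position update whose GNSS variance is inflated when the
    normalized innovation squared (NIS) is statistically implausible.

    Returns (updated_estimate, nis, flagged).
    """
    # Innovation: how far the GNSS fix is from the predicted position.
    nu_x = gnss_x - pred.x
    nu_y = gnss_y - pred.y

    # Innovation variances S = P + R (H = I, diagonal P and R assumed).
    r_eff = r_var
    s_x = pred.var_x + r_eff
    s_y = pred.var_y + r_eff
    nis = nu_x ** 2 / s_x + nu_y ** 2 / s_y

    # Detect: under nominal conditions NIS ~ chi-square(2), so exceeding the
    # 99% threshold suggests an abnormal (possibly spoofed) measurement.
    flagged = nis > threshold
    if flagged:
        # Adjust: inflate the GNSS variance in proportion to the excess,
        # so the fused estimate relies less on the suspicious fix.
        r_eff = r_var * nis / threshold
        s_x = pred.var_x + r_eff
        s_y = pred.var_y + r_eff

    # Standard Kalman gain and update for each (independent) axis.
    k_x = pred.var_x / s_x
    k_y = pred.var_y / s_y
    updated = PosEstimate(
        x=pred.x + k_x * nu_x,
        y=pred.y + k_y * nu_y,
        var_x=(1.0 - k_x) * pred.var_x,
        var_y=(1.0 - k_y) * pred.var_y,
    )
    return updated, nis, flagged


if __name__ == "__main__":
    pred = PosEstimate(0.0, 0.0, 1.0, 1.0)
    print(adaptive_gnss_update(pred, 0.5, 0.5, r_var=1.0))   # nominal fix
    print(adaptive_gnss_update(pred, 20.0, 0.0, r_var=1.0))  # 20 m jump
```

With a 20 m spoofed jump, a non-adaptive update with the same variances would move the estimate 10 m, while this version moves it about 0.88 m. A single-epoch test like this mainly catches abrupt jumps; a spoofer that drifts the position slowly can keep each innovation under the threshold, which is one reason to monitor input statistics over a window rather than per fix.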